Resources: Evaluation

Evaluation can be defined both as a means of assessing performance and as a way of identifying alternative ways to deliver a programme. For example, the new Canadian Federal Evaluation Policy, developed by the Treasury Board of Canada, defines evaluation as "the systematic collection and analysis of evidence on the outcomes of programs to make judgments about their relevance, performance and alternative ways to deliver them or to achieve the same results."

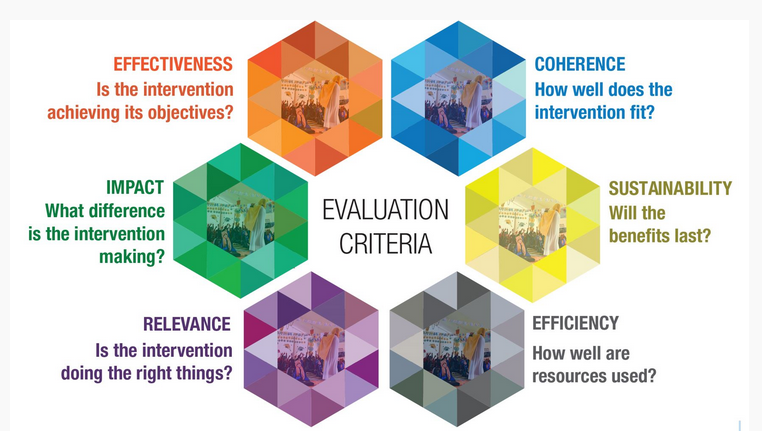

There are many definitions of, and criteria for, evaluation. One example comes from the OECD: 'The OECD DAC Network on Development Evaluation (EvalNet) has defined six evaluation criteria – relevance, coherence, effectiveness, efficiency, impact and sustainability.'

The BetterEvaluation Rainbow Framework organises evaluation options (methods and strategies) by the tasks involved in an evaluation, grouped into seven clusters. Please browse through it: it makes evaluation come alive and supports learners through the steps of thinking about the theory of change in their programme. You may find it useful if you want to evaluate a programme.

Donabedian, and Kruk and Freedman, tell us that we should develop an evaluation framework with a set of specific indicators. When thinking about indicators, consider what makes a good indicator. For example, "health status" is not a good indicator for outcomes. Why is this? Well, what do you mean by 'health status'? How will you measure it? A neat acronym for developing good indicators is "SMART": Specific, Measurable, Achievable, Realistic, Timely. An example of this would be "under 5 mortality per 1,000 population per year": it's specific, you can measure it, you can achieve a reduction in that indicator with your programme so it's achievable and realistic, and you'll be able to collect data on it in the time-frame you need so it's timely.
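To make the "measurable" part concrete, here is a minimal sketch of how a rate indicator expressed per 1,000 population could be calculated and compared between two reporting years. The function name `rate_per_1000` and all of the figures are hypothetical and chosen only for illustration; they are not drawn from any real programme.

```python
def rate_per_1000(events: int, population: int) -> float:
    """Return the number of events per 1,000 population for one reporting period."""
    if population <= 0:
        raise ValueError("population must be positive")
    return 1000 * events / population


# Hypothetical figures for illustration only, not real data.
baseline = rate_per_1000(events=120, population=20_000)    # year before the programme
follow_up = rate_per_1000(events=90, population=20_000)    # year after the programme

# Relative change in the indicator between the two years (negative = reduction).
relative_change = (follow_up - baseline) / baseline

print(f"baseline: {baseline:.1f} per 1,000")
print(f"follow-up: {follow_up:.1f} per 1,000")
print(f"relative change: {relative_change:.0%}")
```

Writing the indicator down this precisely forces the questions the SMART acronym raises: what counts as an event, which population is the denominator, and over what period the data are collected.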

A simple structure, process, outcome model should include:

1. Inputs/Structure (staff, buildings, systems, policies)

2. Process

- Effectiveness (access and quality for the whole population)

- Equity (access and quality for marginalised groups; participation and accountability)

- Efficiency (adequacy of funding; costs and productivity; administrative efficiency)

3. Outcome

- Effectiveness (health outcomes; patient satisfaction)

- Equity (health outcomes for disadvantaged groups)

- Efficiency (cost-effectiveness of the whole programme)
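One way to use the model above when planning an evaluation is to treat it as a grid and check that each cell has at least one indicator. The sketch below assumes that layout; the names `Indicator`, `CELLS` and `uncovered_cells`, and the example indicators, are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass
from typing import Optional

DIMENSIONS = ("effectiveness", "equity", "efficiency")

# Cells of the model as listed above: structure has no sub-dimensions,
# while process and outcome are each split by the three dimensions.
CELLS = [("structure", None)] + [
    (stage, dim) for stage in ("process", "outcome") for dim in DIMENSIONS
]


@dataclass(frozen=True)
class Indicator:
    name: str
    stage: str                       # "structure", "process" or "outcome"
    dimension: Optional[str] = None  # None for structure indicators


def uncovered_cells(indicators):
    """Return the (stage, dimension) cells that have no indicator yet."""
    covered = {(i.stage, i.dimension) for i in indicators}
    return [cell for cell in CELLS if cell not in covered]


# Hypothetical indicators for illustration only.
indicators = [
    Indicator("nurses per 1,000 population", "structure"),
    Indicator("under 5 mortality per 1,000 population per year",
              "outcome", "effectiveness"),
]

gaps = uncovered_cells(indicators)
for stage, dimension in gaps:
    print(f"No indicator yet for {stage} / {dimension}")
```

A check like this does not say whether the indicators are good ones; it only shows where the framework is still empty.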

Here is an example:

Evaluation in the project cycle

There are many different ways to think about the 'project cycle'. Here is one from the United Nations Environment Programme (UNEP) handbook:

- Phase 1: Project identification - analyse the situation, identify the project, and prepare the project proposal

- Phase 2: Project preparation and formulation - feasibility assessment, establish baseline and target data, and plan implementation

- Phase 3: Review and approval - multi-stakeholder review and approval of project plan

- Phase 4: Project implementation - project implementation according to plan, monitoring and reporting of data, and risk assessment and management

- Phase 5: Evaluation - mid-course evaluation for amendments and improvements, and end of project evaluation for generation of lessons learned

An Evidence Based Evaluation Framework.

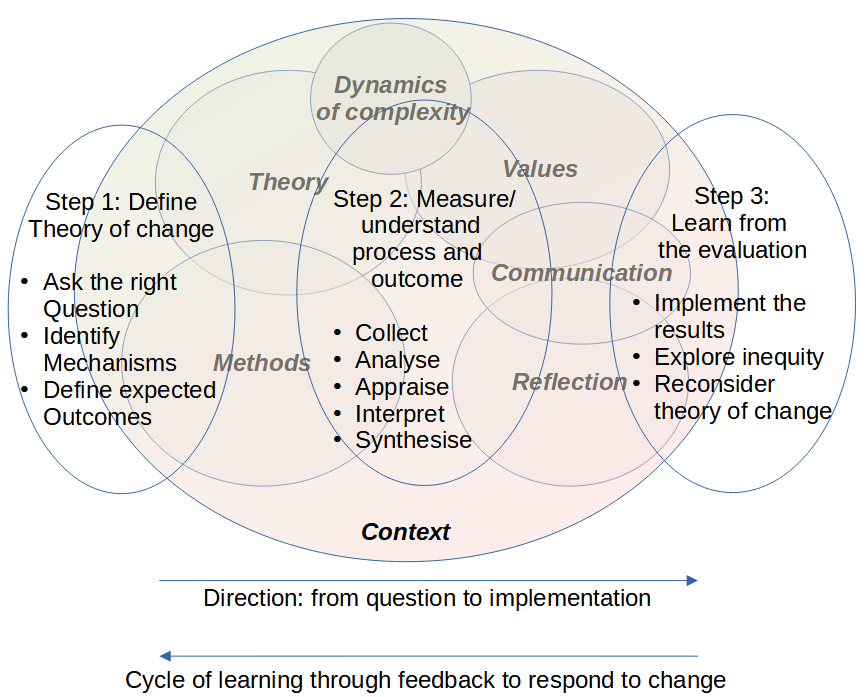

A framework for performing and appraising evaluations has been proposed, building on the experience of Evidence Based Medicine (in press).

In the diagram, three practical steps are shown as ellipses in the foreground, with the constructs that underpin them in the background.

These constructs include theory, methods, values, dynamics of complexity, communication, reflection and context. Context is central to all of this and will affect each of the practical steps and the application of the other constructs. Examples of the competencies required to meet these constructs are presented. Considering both the practical steps and the underpinning constructs supports ‘learning by doing’: education can take place in the context of evaluation in practice, as the steps are followed and informed by learning about the underpinning constructs.