Open publishing: preprints and open reviews

Section outline

-

This course is part of a series of courses offered by the Coalition for Open Publishing of Public Health in Africa (COPPHA). The course initially ran from January to March 2025, but is now available for self-enrolment at any time.

Note: if you want to gain a certificate for completing this course, you will have to create an account and log in as a student.

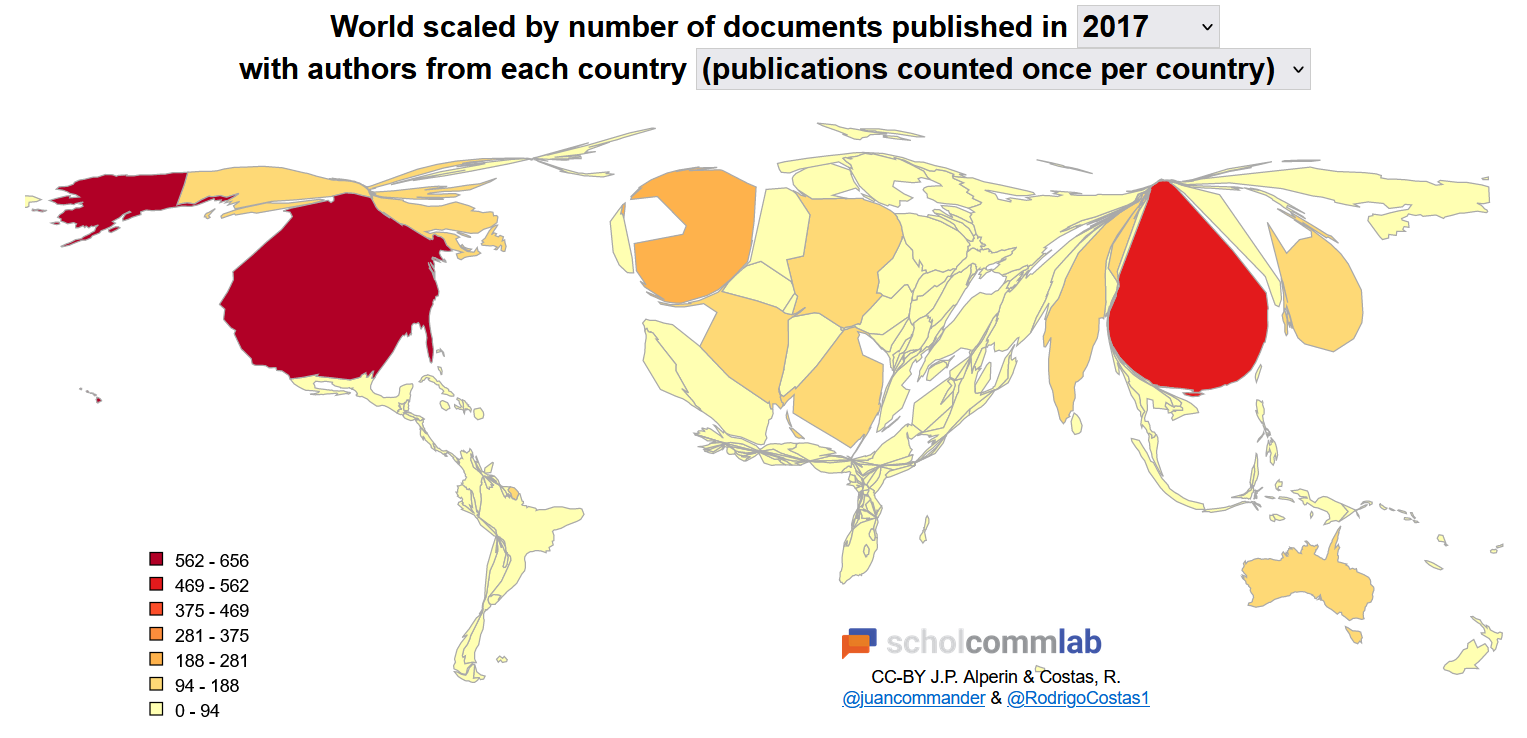

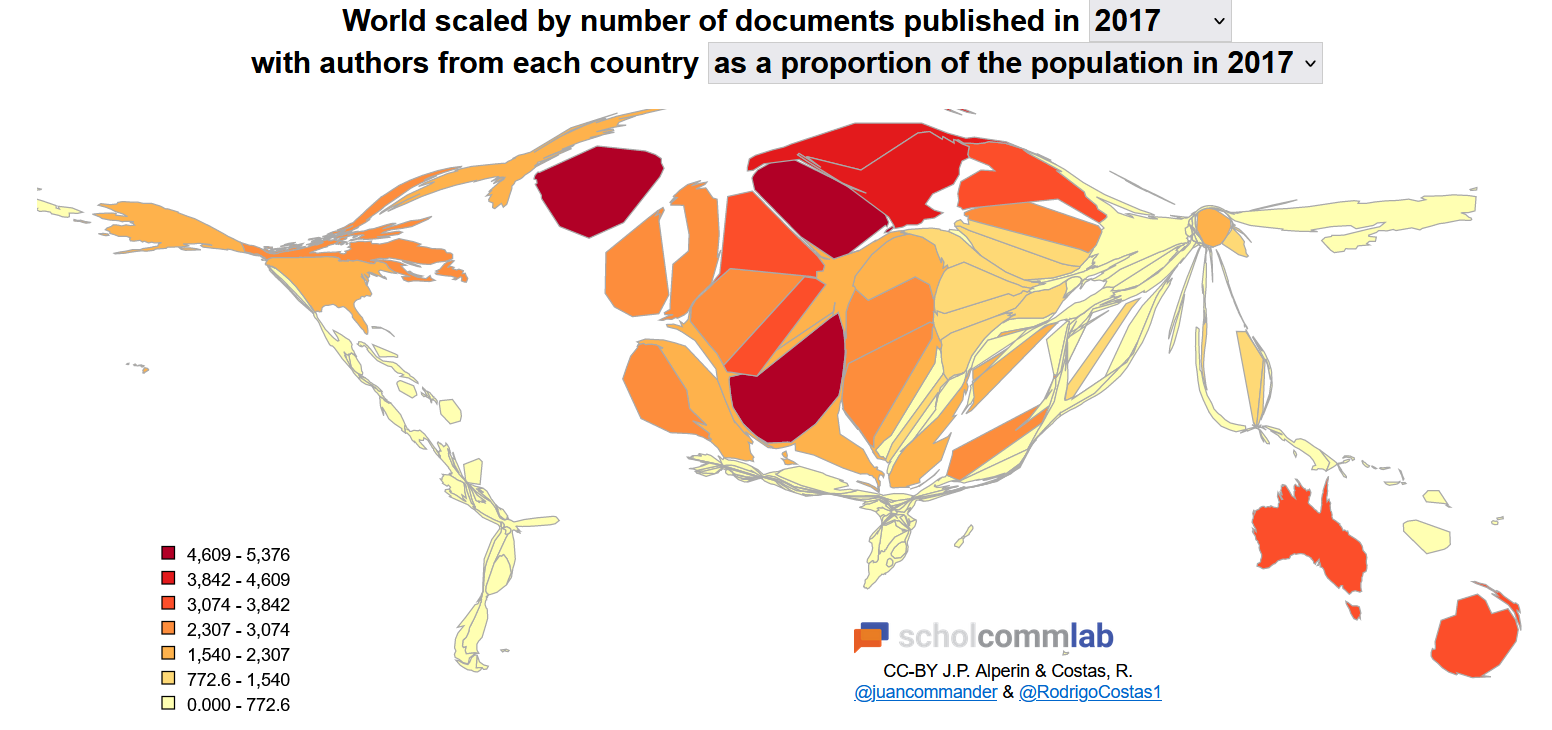

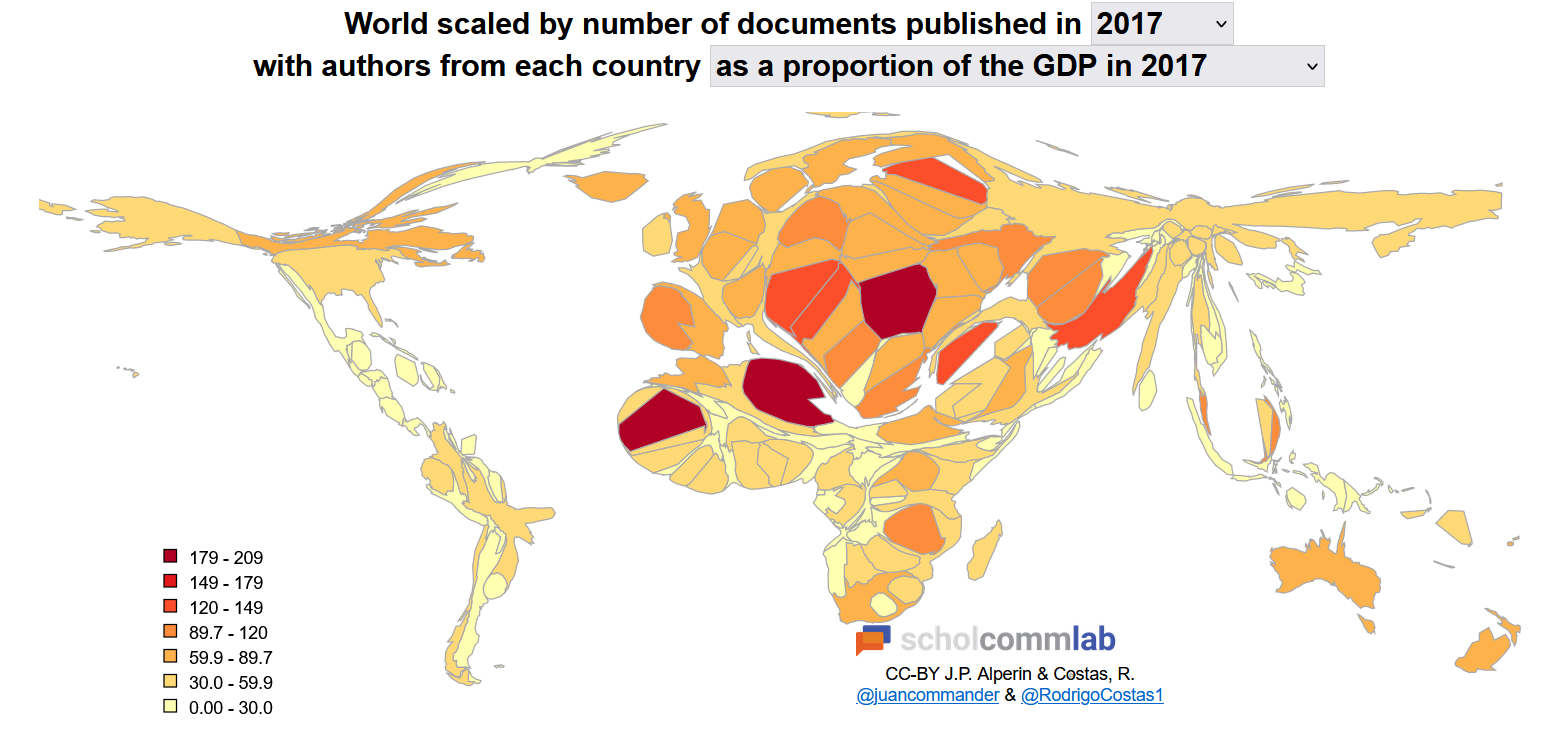

We have discussed some of the problems of the current peer review system in the previous course on Peer reviewing. The problems are each bound up with the publication system for scientific papers. As we have discussed in the Open Science course, the number of research publications from the Global South is low relative to the Global North.

These graphs scale the apparent size of each country by the number of documents cited in the Web of Science. When raw numbers are examined by country, there are gross global differences (Figure 1). These differences are attenuated when the numbers are adjusted for the population size of each country (Figure 2). However, it is not until the figures are adjusted for GDP (Figure 3) that African countries at last become visible in the map.
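The adjustments described above are simple ratios. The following is a minimal sketch of that arithmetic only: the country names and all figures are hypothetical placeholders, not Web of Science data, and the function names are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Country:
    name: str
    documents: int        # cited documents (hypothetical count)
    population_m: float   # population in millions (hypothetical)
    gdp_bn: float         # GDP in billions of US dollars (hypothetical)


def per_million_people(c: Country) -> float:
    """Documents per million inhabitants (the population adjustment)."""
    return c.documents / c.population_m


def per_billion_gdp(c: Country) -> float:
    """Documents per billion dollars of GDP (the GDP adjustment)."""
    return c.documents / c.gdp_bn


# Placeholder values chosen only to illustrate the arithmetic.
countries = [
    Country("Country A", documents=500_000, population_m=300.0, gdp_bn=20_000.0),
    Country("Country B", documents=2_000, population_m=20.0, gdp_bn=40.0),
]

for c in countries:
    print(
        f"{c.name}: raw={c.documents}, "
        f"per million people={per_million_people(c):.1f}, "
        f"per billion GDP={per_billion_gdp(c):.1f}"
    )
```

With these placeholder numbers, Country A leads by a factor of 250 on raw counts and by about 17 per head of population, but Country B is ahead once output is divided by GDP. This mirrors the way a country can be invisible on one map and visible on another.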

The patterns may underestimate the geographical disparities as the Web of Science has been criticised for being structurally biased ‘against research produced in non-Western countries, non-English language research, and research from the arts, humanities, and social sciences.’ Research on health journals published in 13 African countries found that most journals were not indexed. Other valuable research may not appear in journals biased against non-Global North sources. Article processing charges levied on the authors or their institutions are further barriers to publication.

This is a complex problem. There may be relatively less research performed in the Global South, and there may be biases against research from the Global South being published through the current publication system (bias in the peer review system, inability to pay Article Processing Charges). There may also be structural biases in the current publication system that need to be overcome to allow research to be published in a timely and accessible fashion. This is a global problem, but one that is exacerbated for the Global South.

The way that published research is indexed (and hence found through search engines) adds to the problem - the term bibliometric coloniality has been coined to refer to: 'the system of domination of global academic publishing by bibliometric indexes based in the Global North, which serve as gatekeepers of academic relevance, credibility, and quality. These indexes are dominated by journals from Europe and North America. Due to bibliometric coloniality, scholarly platforms and academic research from the African continent and much of the Global South are largely invisible on the global stage.'

This course explores two possible solutions to help achieve visibility for research through innovative publication and review systems. We will explore open publishing and open reviewing systems.

There are a number of new approaches to the review process as well as to the way in which research may be published. Researchers can now put their research reports online for all to access before they are reviewed; these reports are called preprints. Peer review is sought for these preprints in a process of open review. The author(s) can then revise the paper in response to the review. The paper may then be submitted for publication to a more traditional journal, or remain available for open access by anyone interested. This is an evolving field, and we discuss this later in the course.

Course learning outcomes. Be able to:

- Understand the benefits of open reviewing and open publishing

- Demonstrate the ability to post a preprint and an open review

How to navigate the course

Each section comprises a set of resources that we think you will find interesting - click on the collections of resources in each section. There is a forum in each topic for reflection.

We encourage you to reflect on what you have learned or comment on the course. When you click on the blue hyperlink in each topic labelled reflection, you will be able to add a new topic or respond to a previous one. You may want to share your learning from this and other readings, comment on the topics from your own experience, comment on others' posts, or provide feedback on how we can improve the content and presentation.

In the final section you will see that you can gain a Certificate of Completion - the requirements for this are to access the resources, post a reflection in each section, and upload a preprint to the BAOBAB repository in the COPPHA community.

This is a self-directed course, to be taken at your own pace. We encourage you to reflect on the issues and to make notes as you go along, as this is a good way to internalise the information presented and the lessons to be learned from it.

This work is licensed under a Creative Commons Attribution 4.0 International License.

-

We need to set this discussion in the context of the broader movement to open science.

The UNESCO definition of Open Science: ‘Open science is a set of principles and practices that aim to make scientific research from all fields accessible to everyone for the benefits of scientists and society as a whole. Open science is about making sure not only that scientific knowledge is accessible but also that the production of that knowledge itself is inclusive, equitable and sustainable.’

There are many components of openness, as described by UNESCO as Open Solutions: ‘Open Solutions, comprising Open Educational Resources (OER), Open Access to scientific information (OA), Free and Open Source Software (FOSS) and Open Data, have been recognized to support the free flow of information and knowledge, thereby informing responses to global challenges.’

As discussed in the Peer reviewing course, and emphasised here, ‘the history and political economy that allows some academic publishers to charge high fees and make extensive profits while academics provide much of the editorial and review functions without payment, has been well described...There are various categories of open access, and it has been estimated that since 2010, 47% of the 42 million published journal articles and conference papers are openly accessible, while 53% are behind a paywall, either requiring a subscription or pay per use, although there is an increasing trend towards open publication...Journal articles, as well as books and reports, may be published under a Creative Commons licence making them available for free use by others, subject to various conditions...there is widespread global support for the notion that publicly funded research should be freely available and not hidden behind paywalls. There are already many examples of publications of public importance being made freely available on a voluntary basis – such as many of the publications relating to the Covid-19 pandemic which have been published as open access for the public good. In addition to the philosophical issue of making publicly funded research freely available, there are claims that research citations are greater for open access articles than those requiring payment or a subscription.’

A major problem in the move towards open publishing of research papers is that journals make open publication dependent on the payment of Article Processing Charges (APCs). These can be as high as $9000 for a paper in Nature. This is a particular burden for researchers in low income settings who lack rich institutions or research funders prepared to cover these publication costs. We note that the Bill and Melinda Gates Foundation has now refused to cover these costs in their grants.

Hence the case for open repositories has been made, where researchers can upload their research, including the data on which the research is based. This allows open public access so that the results can be used by other researchers as well as policy makers. In addition, and as we discuss later, some repositories are linked to a review system that encourages reviews to be posted, helping the paper to be improved and accepted by other publications.

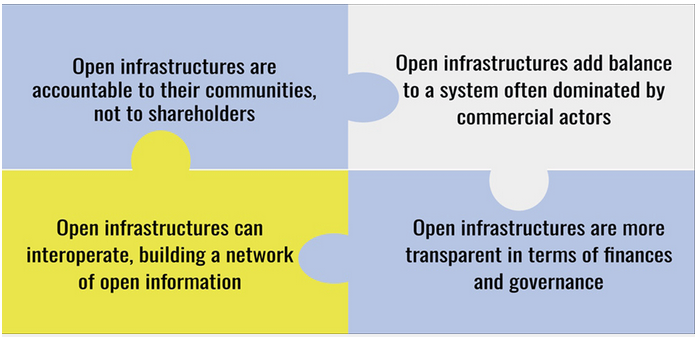

An editorial introducing a special collection Repositories transforming scholarly communication summarised this development nicely: ‘Digital repositories emerged in the 1980s to store and share research articles. In the early 2000s, the open access movement led to the creation of more digital repositories, including institutional repositories that allowed universities to share their research output with the public.’ The paper quotes the figure below in making the case for openness:

Figure: The importance of supporting open infrastructures for scholarly communications

There are a number of repositories in existence, and we list some of them later on.

Indexation and discoverability of research publications.

As mentioned in the Introduction to this course, the way that published research is indexed (and hence found through search engines) adds to the problem - the term bibliometric coloniality has been coined to refer to: 'the system of domination of global academic publishing by bibliometric indexes based in the Global North, which serve as gatekeepers of academic relevance, credibility, and quality. These indexes are dominated by journals from Europe and North America. Due to bibliometric coloniality, scholarly platforms and academic research from the African continent and much of the Global South are largely invisible on the global stage.' The authors propose a ‘process of dismantling bibliometric coloniality and promoting African knowledge platforms’ through ‘the creation of an African scholarly index’.

Central to this is the concept of persistent identifiers (PIDs), described as ‘another crucial element of the scholarly communication ecosystem. PIDs serve as long-lasting references to digital objects, enabling easy and reliable access to research outputs of various types. PIDs can enhance the cohesion and discoverability of African scholarly content, ensuring that research remains accessible and traceable.’ Most will be familiar with the Digital Object Identifier (DOI), but there are others, such as the Archival Resource Key (ARK), which has the advantage of keeping costs down as it can be implemented locally with open source tools.
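To make the idea of a persistent identifier more concrete, here is a minimal sketch that recognises the two schemes mentioned above from their general shape. The regular expressions are simplified assumptions (real DOI and ARK syntax allows more variation), the function name `classify_pid` is invented for illustration, and the ARK shown is a made-up example rather than a real record.

```python
import re
from typing import Optional, Tuple

# Assumed, simplified patterns: a DOI begins with "10." and a registrant
# prefix; an ARK begins with "ark:" followed by a numeric authority number.
DOI_RE = re.compile(
    r"^(?:https?://(?:dx\.)?doi\.org/|doi:)?(10\.\d{4,9}/\S+)$", re.IGNORECASE
)
ARK_RE = re.compile(r"^(?:https?://[^/]+/)?(ark:/?\d{5,}/\S+)$", re.IGNORECASE)


def classify_pid(identifier: str) -> Optional[Tuple[str, str]]:
    """Return (scheme, bare identifier), or None if neither pattern matches."""
    text = identifier.strip()
    for scheme, pattern in (("doi", DOI_RE), ("ark", ARK_RE)):
        match = pattern.match(text)
        if match:
            return scheme, match.group(1)
    return None


# A DOI cited later in this course, and a hypothetical ARK.
print(classify_pid("https://doi.org/10.12688/f1000research.10728.2"))
print(classify_pid("ark:/12345/x6np1wh8k"))
print(classify_pid("not an identifier"))
```

The point of the sketch is that both schemes give a stable string that can be separated from whichever web address currently resolves it, which is what allows a research output to remain traceable if its hosting changes.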

Platforms and repositories

Digital repositories may be used to share the results of research and education. Here we are focusing on the use of repositories to publish preprints and in a later course we will cover their use to facilitate open reviews of these preprints.

The paper Next Generation Repositories from the Confederation of Open Access Repositories offers a good perspective on the importance of repositories.

Sciety aims "to grow a network of researchers who evaluate, curate and consume scientific content in the open", and offers repositories where evaluation of 'preprints' can occur.

eLife is eliminating accept/reject decisions after peer review and instead focusing on preprint review and assessment (more about eLife below).

PREreview (who we met previously for access to the resources they offer) is a platform providing 'ways for feedback to preprints to be done openly, rapidly, constructively, and by a global community of peers.'

medRxiv (pronounced "med-archive") is a free online archive and distribution server for complete but unpublished manuscripts (preprints) in the medical, clinical, and related health sciences. It provides a platform for researchers to share, comment, and receive feedback on their work prior to journal publication. Once posted on medRxiv, manuscripts receive a digital object identifier (DOI), so are discoverable, citable, and indexed by numerous search engines and third-party services. medRxiv is modelled on, but run independently of, arXiv - 'a free distribution service and an open-access archive for nearly 2.4 million scholarly articles in the fields of physics, mathematics, computer science, quantitative biology, quantitative finance, statistics, electrical engineering and systems science, and economics.'

The Baobab repository has been developed in response to the need for an Africa-specific repository. We will be using this to practise uploading both research papers as preprints and open reviews. Baobab uses the ARK identifier.

Focus on preprints

A good definition of preprints comes from the journal PLoS: 'A preprint is a version of a scientific manuscript posted on a public server prior to formal peer review. As soon as it’s posted, your preprint becomes a permanent part of the scientific record, citable with its own unique DOI.' The site goes on to show how to use preprints as a preparatory step to submission to their journal.

eLife has an excellent summary of the reasons behind the preprint concept: 'The current science publishing system relies on a model of peer review that focuses on directing papers into journals. These reviews are not made publicly available, stripping them of their potential value to wider readers and leading committees to judge scientists based on where, rather than what, they publish. This can impact hiring, funding and promotion decisions, and highlights the need for a system of review that helps funding and research organisations assess scientists based on the research itself and related peer reviews.

Researchers globally are taking action to make science publishing and its place in science better, for example by preprinting their work and advocating for others to do so. This gives them greater control over their work and enables them to communicate their findings immediately and widely, and receive feedback quickly.'

You might also like to look at this from eLife: Scientific Publishing: Peer review without gatekeeping which describes why '..eLife began exclusively reviewing papers already published as preprints and asking our reviewers to write public versions of their peer reviews containing observations useful to readers' and that they 'found that these public preprint reviews and assessments are far more effective than binary accept or reject decisions ever could be at conveying the thinking of our reviewers and editors, and capturing the nuanced, multidimensional, and often ambiguous nature of peer review'. This approach is supported by the authors of Is Peer Review a Good Idea? from the viewpoint of the philosophy of science.

A provocative paper PreprintMatch: A tool for preprint to publication detection shows global inequities in scientific publication finds: '...that preprints from low income countries are published as peer-reviewed papers at a lower rate than high income countries (39.6% and 61.1%, respectively), and our data is consistent with previous work that cite a lack of resources, lack of stability, and policy choices to explain this discrepancy.' They also found '...that some publishers publish work with authors from lower income countries more frequently than others.'

Note: there are other suggestions of reviewer bias against journal submissions from the Global South: The role of geographic bias in knowledge diffusion: a systematic review and narrative synthesis, and North and South: Naming practices and the hidden dimension of global disparities in knowledge production.

Here is another interesting idea: Preprint clubs: why it takes a village to do peer review, where the authors (who are early career researchers in high income countries) describe turning their journal clubs into sessions that examine preprints and post their analyses publicly. This could be replicated to include non-institutional and cross-country online discussions.

-

Reflect on your views on open access publishing and how this might be relevant in your own setting. Also, please comment on the posts of other participants.

Posting to this forum is a requirement for a certificate.

-

-

Open reviewing

One of the criticisms of the peer review system has been a lack of transparency. Reviewers are usually anonymous - this is to allow the reviewer to feel able to give a negative review without the author knowing their name. In addition, some journals prefer to blind the reviewer to the names of the authors - hence the review is blind to both author and reviewer, or double blind. However, the evidence about the value of this is mixed. A nice review, Open versus blind peer review: is anonymity better than transparency?, concludes: 'Although double-blind peer review has advantages in the reduction of specific biases, open peer review has the advantage of transparency. Self-awareness among reviewers of their own unconscious biases and any deficits in the methodological expertise required for a review will help improve the quality of peer review across the spectrum, enhancing the quality of published biomedical research.'

Two studies have compared blinding of the names of authors with non-blinding as well as allowing reviewers to remain anonymous. The first, The effects of blinding on the quality of peer review. A randomized trial, reported: 'Blinding was successful for 73% of reviewers. Quality of reviews was higher for the blinded manuscripts (3.5 vs 3.1 on a 5-point scale). Forty-three percent of reviewers signed their reviews, and blinding did not affect the proportion who signed. There was no association between signing and quality.' A subsequent study, Effect of Blinding and Unmasking on the Quality of Peer Review: A Randomized Trial, came to a different conclusion: 'Blinding and unmasking made no editorially significant difference to review quality, reviewers' recommendations, or time taken to review. Other considerations should guide decisions as to the form of peer review adopted by a journal, and improvements in the quality of peer review should be sought via other means.' These studies were both conducted before the advent of preprints and their open reviews, and there do not seem to have been any recent trials.

Publishing research as preprints and the process of open peer review, which we discuss later in this course, do not allow blinding of authors as the whole paper is published as open access. The many advantages of this, including transparency, speed and increased access to research knowledge, outweigh any potential benefits from the blinding process.

Open reviewing has been adopted by a number of journals. F1000Research was one of the early journals to do this, and you can read about how they go about peer review here.

The paper Ten considerations for open peer review is published in F1000Research. Experience has accumulated since that paper, and one of the key features is that the corresponding author of the paper is asked to suggest the names of potential reviewers according to pre-set criteria, which are checked by the editorial staff before requests are sent out. The author of this course has published in F1000Research a few times - for the latest paper, 71 names had to be submitted, of whom 23 were rejected by the journal, mostly for having too few publications. There was no response from 27, and 18 were unavailable, all to obtain 3 people who submitted a review. This anecdote suggests that reviewers may find it difficult to be open about their reviews! Nevertheless, the advantages of open reviewing are considerable. The author has the opportunity to respond to the comments by the reviewer and amend the submission, and the reviewer can take another look and maybe change their recommendation. All this is open and available for readers to see, including each of the various submissions over the whole review process.

Case study

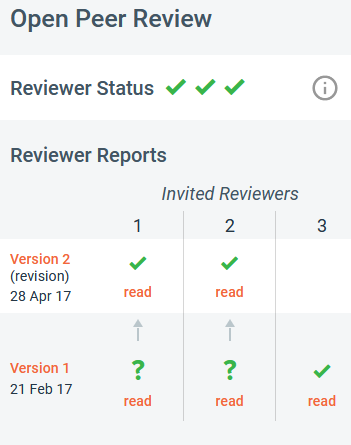

This case study shows the peer review process for the paper whose citation is: Heller RF, Zurynski R, Barrett A et al. Open Online Courses in Public Health: experience from Peoples-uni [version 2; peer review: 3 approved]. F1000Research 2017, 6:170 (https://doi.org/10.12688/f1000research.10728.2). In the side panel of the paper you will see this picture:

This shows that the reviews are open - the reviewers' names are published, as are their reports and the authors' responses. The journal requires that at least two reviewers feel that the paper is acceptable for the paper to be indexed (the paper is already published in its original state in this form of open publishing, but indexation means that it has met appropriate peer review quality standards and can be found in various journal indexation systems such as PubMed). On the first occasion, only one reviewer felt that the paper had met that criterion, and two still had some reservations. When the authors responded to the reviewers' comments and amended the paper, all three were happy that it met the quality standard.

You can access all the reports and responses and different versions of the paper. The course on Peer reviewing is designed to help build skills in peer review, and explores the peer review process demonstrated in this paper in more detail. However, everyone is able to post their opinion in the forum below.

Boosting the value of preprint repositories by posting open reviews

Preprint repositories can have an added benefit by facilitating the publication of open reviews.

PREreview (who we met previously in the Peer reviewing course for access to the resources they offer) is a platform providing 'ways for feedback to preprints to be done openly, rapidly, constructively, and by a global community of peers.'

ASAPbio (Accelerating Science and Publication in Biology), another contributor to the field, 'is a scientist-driven nonprofit working to drive open and innovative communication in the life sciences. We promote the productive use of preprints for research dissemination and transparent peer review and feedback on all research outputs.' Their website makes an important point about why this process matters: 'Science only progresses as quickly and efficiently as it is shared. But even with all of the technological capabilities available today, the process of publishing scientific work is taking longer than ever.'

We will come across the BAOBAB repository for preprints and other resources, and PublishNow, which offers both a repository and a review process, later in this course. These are part of the development of a Coalition for an open access ecosystem for the publication of public health research in Africa (COPPHA). We will be using BAOBAB for the course exercises of posting a preprint and performing an open peer review.

-

Are you comfortable with the open peer review system? Do you think that the reviews shown in the case study were appropriate? How would you have reviewed the paper? Can you see the potential for open reviews of preprints?

Posting to this forum is a requirement for a certificate.

-

-

Introduction to Publish-Review-Curate (PRC)

The Publish-Review-Curate (PRC) model has emerged as an innovative response to the limitations of traditional scholarly publishing. Critics of the traditional model highlight issues such as slow dissemination, lack of transparency, inefficiency, and centralization of decision-making within a small group of editors and peer reviewers. These challenges have stifled diversity and innovation in the evaluation of research, while consuming significant resources with minimal impact on improving research quality.

The PRC model addresses these shortcomings by dividing the scholarly communication process into three distinct phases: Publish, Review, and Curate. This structure enables rapid dissemination, transparent evaluation, and a focus on the scientific quality of research articles rather than the prestige of the journals in which they are published. By decentralizing control and placing researchers at the forefront of the publication process, the model fosters greater inclusivity.

The Diamond Open Access ecosystem supports sustainable and inclusive publishing models, and the PRC approach is a key example. It operates effectively in low-resource environments, offering a viable alternative to traditional publishing. The model's flexibility allows it to support various editorial workflows, peer review methods, and research outputs. As more stakeholders embrace and refine it, the model could become a leading standard in global scientific communication.

The model is gaining traction across the scholarly landscape, with platforms and journals such as eLife, Peer Community In, JMIRx, and PREreview, among others, embracing this approach. Funders, including the Bill & Melinda Gates Foundation and the Howard Hughes Medical Institute, are also actively supporting PRC initiatives by providing financial backing and policy changes to incentivize adoption.

The PRC model emphasizes:

Publish: Researchers take control of the decision to publish their work as preprints, ensuring their work is openly available and discoverable.

Review: Reviewers evaluate preprints, addressing their strengths, weaknesses, and suggestions for improvement. The review reports are made openly available, posted alongside the preprint, ensuring transparency and accountability in the evaluation process.

Curate: Reviewed preprints are compiled into thematic collections, often accompanied by editorial assessments or judgments.

The Publish Phase in PRC

The publish phase in the PRC model empowers researchers to make their work publicly accessible, bypassing traditional journal gatekeeping. Research outputs are assigned persistent identifiers like ARK or DOI upon publication and made open access with clear metadata for discoverability and reuse, which aligns with the FAIR principles. This phase promotes rapid dissemination, enhances visibility, and supports open science principles by ensuring research is accessible to a broader audience. The phase also ensures that shared work is freely available under open licenses, such as CC-BY (Creative Commons Attribution), promoting unrestricted access. Researchers can share their manuscripts through open-access repositories like BAOBAB or preprint servers such as bioRxiv, medRxiv, and other servers that fit their area of study, allowing for decentralized dissemination.

The Review Phase in PRC

The review phase in the PRC model involves openly evaluating research outputs to provide constructive and actionable feedback on their strengths and weaknesses. Unlike traditional peer review, where reviews are often hidden, the PRC model emphasizes transparency and accessibility. Reviews are openly available, assigned persistent identifiers, and remain associated with the research output (e.g., preprints).

The Curation Phase in PRC

The curation phase involves organizing reviewed preprints or other research outputs to enhance discoverability based on their perceived quality. It includes compiling reviewed preprints into thematic collections and applying quality assessments such as endorsements or ratings. Curation ensures that valuable research remains accessible to specific audiences. A key feature of the curation phase is that research outputs published under the PRC model can be curated by multiple entities simultaneously.

Image Source: Hyde, A., Pattinson, D., & Shannon, P. (2022). Designing for Emergent Workflow Cultures: eLife, PRC, and Kotahi. Commonplace. https://doi.org/10.21428/6ffd8432.ef6691ea
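The three phases described above can also be pictured as a small data model. This is only an illustrative sketch, not how BAOBAB, PublishNow or any other platform is implemented: the class names, field names, identifiers and the rule that a preprint needs at least one review before curation are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Review:
    pid: str        # persistent identifier of the review itself
    reviewer: str
    report: str


@dataclass
class Preprint:
    pid: str        # DOI or ARK assigned at the Publish phase
    title: str
    licence: str = "CC-BY"
    reviews: List[Review] = field(default_factory=list)


@dataclass
class Collection:
    curator: str
    theme: str
    items: List[Preprint] = field(default_factory=list)

    def curate(self, preprint: Preprint) -> None:
        """Add a preprint, but only once it has at least one open review."""
        if not preprint.reviews:
            raise ValueError("only reviewed preprints are curated")
        if preprint not in self.items:
            self.items.append(preprint)


# Publish: the author posts the work and it receives a (hypothetical) PID.
paper = Preprint(pid="ark:/12345/example1", title="Hypothetical study")

# Review: an open report with its own PID stays attached to the preprint.
paper.reviews.append(
    Review(pid="ark:/12345/example1-r1", reviewer="Reviewer One", report="...")
)

# Curate: two independent groups include the same reviewed preprint.
group_a = Collection(curator="Group A", theme="maternal health")
group_b = Collection(curator="Group B", theme="methods")
for collection in (group_a, group_b):
    collection.curate(paper)
```

Keeping the reviews on the preprint record, rather than inside any one collection, reflects the point that the same output can be curated by several entities at the same time.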

PublishNow is designed to support PRC

PublishNow is an innovative open review platform operated by WACREN that implements the PRC model by enabling researchers to engage in the open review of preprints (preprints with a DOI or ARK) and other scholarly outputs. With ORCID integration, reviewers’ identities can be disclosed, promoting accountability in the review process. Reviewers also have the option to provide anonymous reviews if they choose. PublishNow offers intuitive tools for assigning reviews, monitoring review progress, and curating reviewed preprints into collections.

COPPHA has a special section on PublishNow which we will be using in the next two sections of the course.

An excellent resource is Understanding the Publish-Review-Curate (PRC) Model of Scholarly Communication 2024. Please take a look and comment on it in the discussion forum.

Exploring Two Models of PRC: A Comparison of eLife and Peer Community In (PCI)

Two Models of PRC with Validation Proposed by Coalition S

Coalition S emphasizes that the Publish-Review-Curate (PRC) model cannot operate effectively in isolation. To ensure rigor and quality, they advocate for incorporating a validation step into the PRC workflow, based on peer reviews. By validation we mean that ideas and evidence expressed in the article have been accepted by the scholarly community. Coalition S in a publication on “Peer-reviewed preprints and the Publish-Review-Curate model” proposes two approaches, both integrating this essential validation:

Publish-Review-Curate (Validation through Curation)

In this model, curation itself serves as the validation step, relying on peer reviews as the basis for this process. This approach mirrors the practices of some scientific journals today. For example, journals may publish peer-reviewed preprints and curate them further, leveraging reviews conducted by external services. Examples include PCI-friendly journals or journals associated with Review Commons, which integrate peer reviews from other services into their editorial and curatorial processes.

Publish-Review (Validation before Curation) - Curate

In this approach, validation is performed before curation through peer review and an explicit editorial decision, such as acceptance. Once validated, the article undergoes curation to enhance its value and impact. This approach is often reflected in the practices of traditional journals where validation precedes curation.

Why Coalition S Proposes Two Types of Publish-Review-Curate (PRC) Models

The proposal by Coalition S for two distinct PRC models stems from the recognition that curation and validation, while interrelated, serve different purposes.

Curation vs. Validation

Curation Defined: Curation involves the classification, selection, and highlighting of reviewed articles based on their perceived quality or relevance. It often serves to guide readers toward noteworthy or impactful research. Curation is typically qualitative and positive, selecting articles for inclusion in collections based on their merits.

Validation Defined: Validation is a more rigorous, binary decision-making process involving an accept/reject outcome based on peer review. It confirms that an article meets specific criteria for publication and scientific quality. Validation offers a clear signal to readers that the article has been evaluated and endorsed by a part of the scientific community.

The Issue with Ambiguity in Curation and Validation: Without clear validation (accept/reject), curated articles might mislead readers into believing they have undergone a thorough peer-review process when they may not have.

Comparing the Two PRC Models to eLife and PCI

The model is applied differently by organizations like eLife and Peer Community In (PCI), yet both share common elements, especially in how peer review and curation are integrated. Below is a breakdown of how these models work in practice and the differences between them:

1. eLife and the PRC Model

eLife's approach to the PRC model focuses on peer review and editorial assessment without a traditional accept/reject decision.

Key Feature: eLife’s model removes the traditional accept/reject decision and instead highlights the feedback through qualitative editorial input and public reviews, providing transparency without a clear binary validation. You can learn more about eLife’s model here.

2. PCI and the PRC Model

In contrast, PCI follows a clear binary validation process, providing a definitive editorial decision:

Key Feature: PCI’s model ensures clear validation of an article through its accept/reject decision, while curation follows once the article is accepted. The recommendation of an article is a positive editorial decision made by a recommender, based on at least two thorough peer reviews and following one or more rounds of review. You can learn more about the PCI model.

Key Differences Between eLife and PCI

eLife operates with a qualitative editorial process, not bound by a binary decision. Instead, it emphasizes public reviews and editorial feedback without a clear "accept" or "reject."

PCI operates with a binary validation system, where a definitive editorial decision is made, followed by public recommendations once the article is accepted.

-

Do you believe that a clear binary accept/reject decision is essential for maintaining the integrity of the publication process? Why or why not?

Posting to this forum is a requirement for a certificate.

-

This section of the course is for participants to practise posting a preprint.

A recording of a Zoom session explains how to post a preprint on BAOBAB. You can access the presentation here.

There are a growing number of servers on which to post preprints, such as medRxiv or PublicHealthRN; however, we plan to use the new BAOBAB repository.

BAOBAB has a special section, or community, for public health - the Coalition for Open Publishing of Public Health in Africa (COPPHA).

You can post a full article of the kind you would submit to a journal (research, commentary, review, letter, etc.). If you do not have such an article, you can post a presentation that you have made, or even a commentary about this course - anything. This is designed to demonstrate the system for posting preprints. Your file will be considered as a preprint, and will be published after consideration by a moderator.

You can find a User Guide for BAOBAB here. The Coalition for Open Publishing of Public Health in Africa (COPPHA) section can be found here.

You may be asked for an ORCID ID to post to BAOBAB. ORCID stands for Open Researcher and Contributor ID. If you have not registered with ORCID yet, you will have to do so. It is very easy and you can access the instructions here.

The process:

- Go to https://baobab.wacren.net/communities/coppha/records which will take you to the COPPHA section of BAOBAB.

- Click on New upload. You will be prompted to sign up to BAOBAB.

- You will then be able to upload your file and will be asked to complete Basic information about the file: Resource type; Title; Authors. This is all you need to fill out - there is a subsequent section, Recommended information, and you can complete some of that if you wish, but it is not necessary.

- Scroll to the bottom and click on Submit for review.

- The COPPHA community moderator will review the file and if it is suitable it will be published and you will receive an ARK digital identifier. This publication is as a preprint and acceptance does not imply that it has been subject to peer review - the moderator will only have the role of checking that it is a suitable file.

-

There are two tasks in this forum.

1. Post your preprint on the BAOBAB repository in the COPPHA community section https://baobab.wacren.net/communities/coppha/records. You will have to sign up. Then upload the file that you have prepared as a preprint. The process is described above in the section of the course. Then post the title of the file you have uploaded to this forum.

2. Tell us briefly why you chose this document to upload as a preprint and describe it briefly.

-

This week, we will identify some of the ways in which you can post open peer reviews, and complete the course by reflecting on the peer review process, especially on the assignments posted during the COPPHA peer review course.

Part 1: Open reviews

We have discussed the potential value of open peer review, as well as some stated reservations. You might want to explore these in more detail in this post from OpenAIRE, which identifies seven traits of open peer review:

Open identities: Authors and reviewers are aware of each other's identity.

Open reports: Review reports are published alongside the relevant article.

Open participation: The wider community is able to contribute to the review process.

Open interaction: Direct reciprocal discussion between author(s) and reviewers, and/or between reviewers, is allowed and encouraged.

Open pre-review manuscripts: Manuscripts are made immediately available (e.g., via pre-print servers like arXiv) in advance of any formal peer review procedures.

Open final-version commenting: Review or commenting on final “version of record” publications.

Open platforms: Review is de-coupled from publishing in that it is facilitated by a different organizational entity than the venue of publication.

We would like to draw your attention to the last point about open platforms.

PublishNow is a 'state-of-the-art scholarly publishing platform designed to streamline and enhance collaborative editing and comprehensive reviews.' It is part of the suite of open publishing opportunities linked to COPPHA – we worked with the BAOBAB repository in the previous section of the course. The platform has a section for posting open reviews of articles on Public Health. Each review will be given an ARK identifier. ARKs are persistent identifiers that serve a similar purpose to the Digital Object Identifiers (DOIs) assigned to journal articles: “Archival Resource Keys (ARKs) serve as persistent identifiers, or stable, trusted references for information objects. Among other things, they aim to be web addresses (URLs) that don’t return 404 Page Not Found errors. The ARK Alliance is an open global community supporting the ARK infrastructure on behalf of research and scholarship.”
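For readers who want to see what an ARK looks like in practice, the following is a minimal Python sketch that splits an ARK into its parts. It assumes the general shape `[resolver/]ark:[/]NAAN/Name`, where the NAAN is the number of the organisation that assigned the name. The function `parse_ark`, the `Ark` type and the example identifier are all hypothetical and for illustration only; they are not identifiers issued by BAOBAB or PublishNow.

```python
import re
from typing import NamedTuple, Optional


class Ark(NamedTuple):
    """The parts of an ARK (names chosen for this sketch only)."""
    resolver: Optional[str]  # web address of the resolving service, if present
    naan: str                # Name Assigning Authority Number
    name: str                # name assigned by that authority


# Assumed general shape: [https://resolver/]ark:[/]NAAN/Name
ARK_PATTERN = re.compile(
    r"^(?:(?P<resolver>https?://[^/]+)/)?ark:/?(?P<naan>[^/]+)/(?P<name>.+)$"
)


def parse_ark(text: str) -> Ark:
    """Split an ARK string into resolver, NAAN and name."""
    match = ARK_PATTERN.match(text.strip())
    if match is None:
        raise ValueError(f"not an ARK: {text!r}")
    return Ark(match["resolver"], match["naan"], match["name"])


# Placeholder identifier, not a real record.
print(parse_ark("https://example.org/ark:/99999/fk4abc123"))
```

The part after `ark:` stays the same even if the resolver address in front of it changes, which is what allows an ARK to remain a stable reference.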

PublishNow is in its later stages of development, with major upgrades being implemented. We hope to provide a demonstration of its use during the week. Once it is officially launched and open for use, all COPPHA Open Publishing and Peer Review graduates, as well as the broader COPPHA Community, will be notified.

In the meantime, we suggest that you explore MetaROR, which “provides a platform that leverages the publish–review-curate model to improve the dissemination and evaluation of metaresearch”. Note: MetaROR uses “the term metaresearch, or research on research, to refer to all fields of research that study the research system itself.” MetaROR uses a similar system to that planned for PublishNow, so it is highly relevant.

On the MetaROR site you will find the interesting paper Preprint review services: Disrupting the scholarly communication landscape, which itself has been reviewed – and the reviews are open to view. Please explore this paper as well as the reviews, and we will discuss it in the forum.

Part 2: Reflecting on the assignments posted during the COPPHA peer review course

During the initial offering of the course Peer reviewing - from COPPHA (copied here, where you can access and enrol on it), an assignment required participants to complete their reviews using a common proforma. The table below shows the distribution of the answers given by those who submitted a review – the answers highlighted in yellow were those given in the model answer derived from a consensus of three of the course tutors.

| Question | Answer | N | % of 210 participants* |
|---|---|---|---|
| Does the abstract provide a concise summary of the study, including introduction, methods, results, and conclusions? | Yes | 144 | 69 |
| | No | 9 | 4 |
| | Partially | 45 | 21 |
| Do you have any concerns about the Introduction or Background to the study? These might include concerns about the literature review or the research question or hypothesis. | Major concerns | 19 | 9 |
| | Minor concerns | 80 | 38 |
| | No concerns | 97 | 46 |
| Do you have any concerns about the Methods section of the paper? These might include concerns about selection, measurement or confounding biases or the statistical analyses. | Major concerns | 33 | 16 |
| | Minor concerns | 86 | 41 |
| | No concerns | 97 | 37 |
| Do you have any concerns about the Results section of the paper? These might include concerns about selection, measurement or confounding biases or the statistical analyses - or if each table, figure or graph is fully understandable as a stand-alone. | Major concerns | 22 | 11 |
| | Minor concerns | 81 | 39 |
| | No concerns | 94 | 45 |
| Do you have any concerns about the Discussion or Conclusions section of the paper? These might include ethical concerns, usefulness or generalisability of the findings and if the study limitations have been adequately identified. | Major concerns | 20 | 10 |
| | Minor concerns | 74 | 35 |
| | No concerns | 101 | 48 |
| What is your recommendation? | Approve without revision | 39 | 19 |
| | Approve, require minor revision | 125 | 60 |
| | Approve, require major revision | 28 | 13 |
| | Reject | 5 | 2 |
| Transparency and Reproducibility: Is the research process transparent, and are data or code available for others to reproduce your findings? | Yes | 130 | 62 |
| | No | 11 | 6 |
| | Partial | 52 | 25 |
| | Not relevant | 3 | 4 |
| Language: Do you have any concerns about the writing style or use of language? | Yes | 40 | 19 |
| | No | 154 | 74 |

*% of all 210 submissions (some answers were left blank, so the percentages for each question may not sum to 100)

Please explore this table. The discussion forum will ask you to reflect on the differences between the model answers and those given by the participants.
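If you would like to check how the percentages in the table relate to the counts, here is a minimal Python sketch using the first question (the abstract). It assumes that each percentage is the count divided by the 210 participants named in the column heading, rounded to the nearest whole number; the rounding rule and the function name `percentages` are assumptions made for this illustration.

```python
TOTAL_PARTICIPANTS = 210  # denominator taken from the table's column heading

# Counts for the first question (abstract), copied from the table.
abstract_counts = {"Yes": 144, "No": 9, "Partially": 45}


def percentages(counts: dict[str, int], total: int) -> dict[str, int]:
    """Return each count as a whole-number percentage of `total`."""
    return {answer: round(100 * n / total) for answer, n in counts.items()}


abstract_pct = percentages(abstract_counts, TOTAL_PARTICIPANTS)

# Participants who left this question blank.
blank = TOTAL_PARTICIPANTS - sum(abstract_counts.values())

print(abstract_pct)                 # {'Yes': 69, 'No': 4, 'Partially': 21}
print(sum(abstract_pct.values()))   # 94
print(blank)                        # 12
```

The three percentages add up to 94 rather than 100 because 12 of the 210 participants left this question blank.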

Finally.

As you see, open publishing is a new and developing field. We hope that the two courses on peer review and open publishing have stimulated you to think about how we can improve both the quality of, and access to, scientific research globally. We hope that you will join COPPHA in its Community of Practice and contribute to further developments in the field.

-

We ask you to consider two issues in this forum:

1. Open review platform. What is your view of the use of open review platforms? You might refer to the paper Preprint review services: Disrupting the scholarly communication landscape, which we presented above.

2. Reflecting on assignment answers. What do you think are the reasons for the differences between the majority of the participant responses and those given by the tutors in the model answers? In particular, why did only 13% of the participants suggest Approve, require major revision, which was the preferred response in the model answer?

-

In this section you are encouraged to gain a certificate. The requirements are to post to each of the forums and download the review proforma. We ask you to upload a document to the BAOBAB repository and post the resulting URL to the forum on posting a preprint. Because the certificate is issued automatically, we are not able to verify that you have uploaded to the repository, and we trust that you have done so.